We may need either to turn to a different technique to increase trust in and acceptance of decision-making algorithms, or to question the need to rely solely on AI for such impactful decisions in the first place. With explainable AI (XAI), a business can troubleshoot and improve model performance while helping stakeholders understand the behavior of AI models. Investigating model behavior by monitoring model insights on deployment status, fairness, quality, and drift is essential to scaling AI. XAI applies specific techniques and methods to ensure that every decision made during the ML process can be traced and explained. AI, on the other hand, often arrives at a result using an ML algorithm, but the architects of the AI system do not fully understand how the algorithm reached that result. This makes it hard to check for accuracy and leads to a loss of control, accountability, and auditability.

New research in interpretable AI continues to advance, with innovations like self-explaining AI models that build transparency directly into their design. Explainability enables organizations to identify and mitigate biases, ensuring ethical AI use in hiring, lending, healthcare, and beyond. For many use cases, such as medical diagnosis, loan decisions, or policy modeling, knowing that a feature is correlated with an outcome is not enough. In algorithmic trading, institutional investors increasingly demand transparency. XAI helps deconstruct opaque strategies, revealing how market signals, sentiment indicators, or historical patterns drive trading decisions. Layer-wise relevance propagation (LRP) distributes the model’s output relevance backward through each layer to identify which neurons and inputs had the most influence.
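To make the backward redistribution in LRP concrete, here is a minimal sketch using the epsilon rule on a tiny bias-free ReLU network. The weights, the input, and the function names are all hypothetical and chosen only for illustration; real LRP implementations handle biases, other layer types, and several propagation rules.

```python
# Minimal LRP sketch (epsilon rule) for a bias-free, two-layer ReLU network.
# All weights and inputs below are made-up illustration values.

def relu(values):
    return [max(0.0, v) for v in values]

def forward(x, W1, w2):
    """Return (hidden activations, scalar output) for input x."""
    n_hidden = len(w2)
    hidden = relu([sum(x[i] * W1[i][j] for i in range(len(x)))
                   for j in range(n_hidden)])
    output = sum(hidden[j] * w2[j] for j in range(n_hidden))
    return hidden, output

def lrp_layer(activations, weights, relevance_out, eps=1e-9):
    """Redistribute relevance from a layer's outputs back to its inputs.

    weights[i][k] connects input unit i to output unit k. Each input receives
    a share of relevance_out[k] proportional to its contribution
    activations[i] * weights[i][k] to unit k's pre-activation.
    """
    n_in, n_out = len(activations), len(relevance_out)
    relevance_in = [0.0] * n_in
    for k in range(n_out):
        z = sum(activations[i] * weights[i][k] for i in range(n_in))
        z += eps if z >= 0 else -eps  # stabiliser: avoids division by zero
        for i in range(n_in):
            relevance_in[i] += activations[i] * weights[i][k] / z * relevance_out[k]
    return relevance_in

x = [1.0, 2.0, 0.5]                           # hypothetical input features
W1 = [[0.5, -1.0], [0.25, 0.5], [-1.0, 1.0]]  # input -> hidden weights
w2 = [2.0, 1.0]                               # hidden -> output weights

hidden, output = forward(x, W1, w2)

# Start with all relevance at the output, then walk backward layer by layer.
hidden_relevance = lrp_layer(hidden, [[w] for w in w2], [output])
input_relevance = lrp_layer(x, W1, hidden_relevance)

most_influential = max(range(len(x)), key=lambda i: abs(input_relevance[i]))
print("input relevance:", input_relevance)
print("most influential input index:", most_influential)
```

Because this toy network has no biases, the input relevances sum (up to the stabiliser) to the network output, which is the conservation property LRP is designed around.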

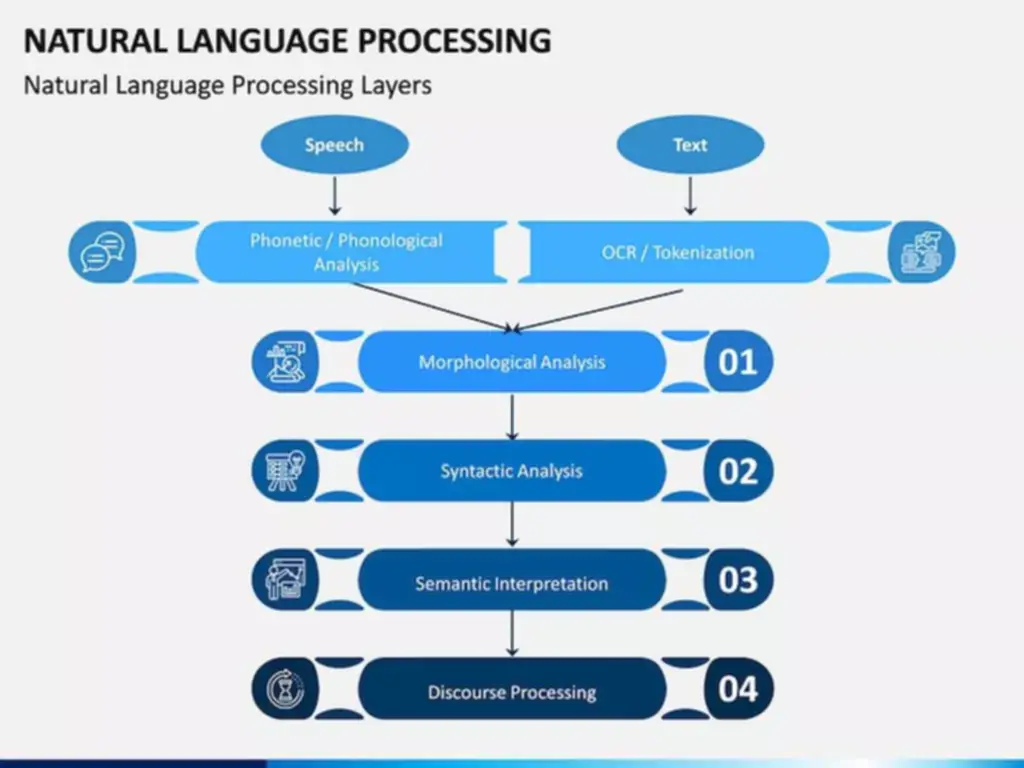

Ensuring fairness through explainability is crucial for maintaining ethical standards and avoiding discriminatory practices. However, perhaps the biggest hurdle for explainable AI is AI itself, and the breakneck pace at which it is evolving. Interrogating the decisions of a model that makes predictions based on clear-cut inputs like numbers is much easier than interrogating the decisions of a model that relies on unstructured data like natural language or raw images.

Challenges In Implementing Explainable AI

AI refers to any system that mimics human intelligence, while explainable AI specifically focuses on making AI models transparent and understandable, ensuring users can interpret and trust their outputs. While techniques like token attribution can offer some insight into a language model, its deep learning architecture functions largely as a “black box,” meaning its decision-making process isn’t inherently transparent. AI is increasingly used in critical applications, from healthcare to finance, and its decisions can have real-world consequences. By prioritizing transparency, organizations can improve trust, compliance, and performance.

- AI often arrives at a result using an ML algorithm, but the architects of the AI system do not fully understand how the algorithm reached that result.

- Understanding why an autonomous vehicle makes a specific decision is crucial for safety.

- Technical complexity drives the need for more sophisticated explainability methods.

- In the automotive industry, particularly for autonomous vehicles, explainable AI helps in understanding the decisions made by AI systems, such as why a vehicle took a specific action.

Instantiating The Prediction Model And Training It On (X_train, y_train)

In the automotive industry, particularly for autonomous vehicles, explainable AI helps in understanding the decisions made by AI systems, such as why a vehicle took a particular action. Improving safety and gaining public trust in autonomous vehicles depends heavily on explainable AI. Techniques for creating explainable AI have been developed and applied across all steps of the ML lifecycle. Methods exist for analyzing the data used to develop models (pre-modeling), incorporating interpretability into the architecture of a system (explainable modeling), and producing post-hoc explanations of system behavior (post-modeling). Selecting the right explanation method depends on your model type and industry needs. Decision trees may work well for structured data, whereas SHAP or LIME may be needed for deep learning models.
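As a rough illustration of the "explainable modeling" route for structured data, the sketch below instantiates the simplest possible decision tree (a one-split decision stump), trains it on `(X_train, y_train)`, and prints the learned rule. The loan-style data, the feature names, and the helper functions are hypothetical; in practice a library tree implementation would take their place.

```python
# Minimal sketch: a one-split decision stump as a self-interpretable model.
# The training data and feature names are made up for illustration.

def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)

def majority(labels):
    """Most common 0/1 label (ties resolve to 1)."""
    return int(sum(labels) / len(labels) >= 0.5)

def fit_stump(X_train, y_train, feature_names):
    """Pick the (feature, threshold) split with the lowest weighted Gini."""
    best = None
    n = len(y_train)
    for j, name in enumerate(feature_names):
        for threshold in sorted({row[j] for row in X_train}):
            left = [y for row, y in zip(X_train, y_train) if row[j] <= threshold]
            right = [y for row, y in zip(X_train, y_train) if row[j] > threshold]
            if not left or not right:
                continue  # a split must send samples to both sides
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best["score"]:
                best = {"score": score, "feature": j, "name": name,
                        "threshold": threshold,
                        "left_label": majority(left),
                        "right_label": majority(right)}
    return best

def predict(stump, row):
    if row[stump["feature"]] <= stump["threshold"]:
        return stump["left_label"]
    return stump["right_label"]

def explain(stump):
    """The model is its own explanation: a single readable rule."""
    return (f"if {stump['name']} <= {stump['threshold']}: predict "
            f"{stump['left_label']}, else predict {stump['right_label']}")

feature_names = ["income_k", "debt_ratio"]
X_train = [[25, 0.60], [30, 0.50], [45, 0.40],
           [60, 0.30], [80, 0.20], [38, 0.35]]
y_train = [0, 0, 1, 1, 1, 0]  # 1 = approved, 0 = denied

stump = fit_stump(X_train, y_train, feature_names)
print(explain(stump))
print(predict(stump, [50, 0.30]))
```

A deep network offers no comparably readable rule, which is why post-hoc tools such as SHAP or LIME come into play for those models.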

Explainability lets developers communicate directly with stakeholders to show they take AI governance seriously. Compliance with regulations is also increasingly important in AI development, so proving compliance assures the public that a model is not untrustworthy or biased. With AI being used in industries such as healthcare and financial services, it is important to ensure that the decisions these systems make are sound and trustworthy. They must be free from biases that might, for example, deny a person a mortgage for reasons unrelated to their financial qualifications. AI tools used for segmenting customers and targeting ads can benefit from explainability by providing insights into how decisions are made, improving strategic decision-making and ensuring that marketing efforts are effective and fair. Regulatory frameworks often mandate that AI systems be free from biases that could lead to unfair treatment of individuals based on race, gender, or other protected characteristics.
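One simple way to start checking for the kind of bias described above is to compare approval rates across groups. The sketch below computes per-group approval rates and their ratio (often called a disparate impact ratio). The group labels and decisions are fabricated for illustration, and any threshold applied to the ratio is an assumption on our part: a commonly cited rule of thumb flags ratios below 0.8, but the right test depends on the applicable regulation.

```python
# Minimal sketch: compare approval rates between groups.
# Group labels and decisions are fabricated illustration data.
from collections import defaultdict

def approval_rates(decisions):
    """decisions: iterable of (group, was_approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approvals[group] += int(was_approved)
    return {group: approvals[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so rates are equal
    return min(rates.values()) / highest

decisions = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A low ratio does not prove discrimination by itself; it signals where an explanation method should be pointed to find out which features drive the gap.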

As the field continues to evolve, explainable AI will play an increasingly important role in ensuring that the benefits of AI are realized in a way that is ethical, fair, and aligned with human values. An interdisciplinary approach will be crucial for creating XAI systems that are not only technically sound but also user-friendly and aligned with human cognitive processes. Explainable AI makes artificial intelligence models more manageable and understandable. This helps developers determine whether an AI system is working as intended and uncover errors more quickly. Self-interpretable models are themselves the explanations, and can be directly read and interpreted by a human.

Some of the most common self-interpretable models include decision trees and regression models, including logistic regression. Explainable AI is a set of techniques, principles, and processes that aim to help AI developers and users alike better understand AI models, both in terms of their algorithms and the outputs they generate. AI models used for diagnosing diseases or suggesting treatment options must provide clear explanations for their recommendations. In turn, this helps physicians understand the basis of the AI’s conclusions, ensuring that decisions are reliable in critical medical scenarios. The inherent complexity of modern software systems, particularly in AI and machine learning, creates a significant hurdle for explainability.

Understand the importance of establishing a defensible assessment process and consistently categorizing each use case into the appropriate risk tier.

XAI’s potential to fundamentally reshape the relationship between humans and AI systems sets it apart. Explainable AI, at its core, seeks to bridge the gap between the complexity of modern machine learning models and the human need for understanding and trust. Explainable AI encompasses a set of techniques, tools, and methodologies designed to make machine learning models and their predictions transparent to human users.

When model performance drops or anomalies arise, explainability is crucial for debugging. Techniques such as feature attribution and counterfactual analysis help engineers isolate the causes of errors, reduce false positives, and fine-tune performance in complex, high-stakes deployments. XAI helps security personnel understand why specific activities are flagged, reducing false alarms and improving accuracy. In 2023, reports from The Guardian highlighted concerns over opaque AI surveillance systems in public spaces. Self-interpretable models are inherently interpretable because of their simpler structures, meaning their decision-making process can be easily understood without additional tools. They are often preferred in high-stakes industries like finance and healthcare, where transparency is essential.
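Counterfactual analysis, mentioned above as a debugging technique, asks what minimal change to an input would have flipped the model's decision. The sketch below does this for a hypothetical linear scoring model, changing one feature at a time. The weights, bias, feature names, and applicant are invented for illustration, and the sketch does not check whether a suggested value is realistic (for example, a negative debt ratio).

```python
# Minimal sketch: single-feature counterfactuals for a linear scoring model.
# WEIGHTS, BIAS, and the applicant below are hypothetical illustration values.

WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.1}
BIAS = -1.5

def score(applicant):
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)

def approved(applicant):
    return score(applicant) >= 0

def single_feature_counterfactuals(applicant, mutable, margin=1e-6):
    """For each mutable feature, the value at which the decision just flips.

    Since the score is linear, moving one feature by (target - score) / weight
    lands the score on the target, which sits just across the decision
    boundary from the current outcome.
    """
    current = score(applicant)
    target = margin if current < 0 else -margin
    flips = {}
    for name in mutable:
        weight = WEIGHTS[name]
        if weight == 0:
            continue  # this feature cannot move the score
        flips[name] = applicant[name] + (target - current) / weight
    return flips

applicant = {"income_k": 40, "debt_ratio": 0.5, "years_employed": 2}
flips = single_feature_counterfactuals(
    applicant, mutable=["income_k", "debt_ratio", "years_employed"])

print("approved:", approved(applicant))
for name, value in flips.items():
    print(f"decision flips if {name} changes from {applicant[name]} to {value:.2f}")
```

For non-linear models there is no closed-form answer like this, so counterfactuals are usually found by search or optimization instead.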
